Can you do better than top-level AI models on these basic vision tests?

Abstract analysis that is trivial for humans often stymies GPT-4o, Gemini, and Sonnet.

Whatever you do, don't ask the AI how many horizontal lines are in this image. (credit: Getty Images)

In the last couple of years, we've seen amazing advancements in AI systems when it comes to recognizing and analyzing the contents of complicated images. But a new paper highlights how many state-of-the-art "vision language models" (VLMs) often fail at simple, low-level visual analysis tasks that are trivially easy for a human.

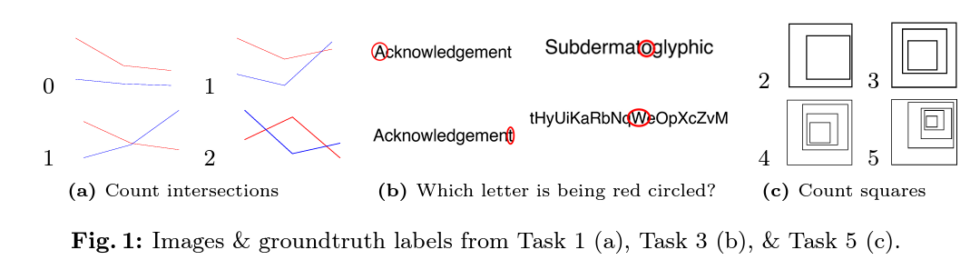

In the provocatively titled pre-print paper "Vision language models are blind" (which has a PDF version that includes a dark sunglasses emoji in the title), researchers from Auburn University and the University of Alberta create eight simple visual acuity tests with objectively correct answers. These range from identifying how often two colored lines intersect to identifying which letter in a long word has been circled to counting how many nested shapes exist in an image (representative examples and results can be viewed on the research team's webpage).

If you can solve these kinds of puzzles, you may have better visual reasoning than state-of-the-art AIs. [credit: Rahmanzadehgervi, Bolton, Taesiri, Nguyen]

Crucially, these tests are generated by custom code and don't rely on pre-existing images or tests that could be found on the public Internet, thereby "minimiz[ing] the chance that VLMs can solve by memorization," according to the researchers. The tests also "require minimal to zero world knowledge" beyond basic 2D shapes, making it difficult for the answer to be inferred from "textual question and choices alone" (which has been identified as an issue for some other visual AI benchmarks).
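To get a feel for what "generated by custom code" can mean in practice, here is a minimal Python sketch of a line-intersection puzzle generator. It is not the researchers' code: the function names `count_crossings` and `make_line_puzzle`, the three-point line shape, and the height range are all assumptions made for illustration. The point is only that a seeded random generator can produce a fresh puzzle and its objectively correct answer at the same time, with nothing for a model to have memorized.

```python
import random


def count_crossings(red, blue):
    """Count crossings of two polylines that share the same x positions.

    Assumes the lines never have equal heights at a shared x position, so
    each sign change in (red - blue) between neighboring points is exactly
    one crossing.
    """
    diffs = [r - b for r, b in zip(red, blue)]
    return sum(1 for left, right in zip(diffs, diffs[1:]) if left * right < 0)


def make_line_puzzle(seed, knots=3, max_height=10):
    """Build one hypothetical puzzle: two polylines plus the true answer."""
    rng = random.Random(seed)  # seeded, so each puzzle is reproducible
    while True:
        red = [rng.randint(0, max_height) for _ in range(knots)]
        blue = [rng.randint(0, max_height) for _ in range(knots)]
        # Reject layouts where the lines merely touch at a shared point,
        # which would make the "correct" count ambiguous.
        if all(r != b for r, b in zip(red, blue)):
            break
    return {"red": red, "blue": blue, "answer": count_crossings(red, blue)}


if __name__ == "__main__":
    puzzle = make_line_puzzle(seed=0)
    print(puzzle)
```

A real benchmark would also render the two lines to an image before showing them to a model; that step is left out here to keep the sketch dependency-free.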