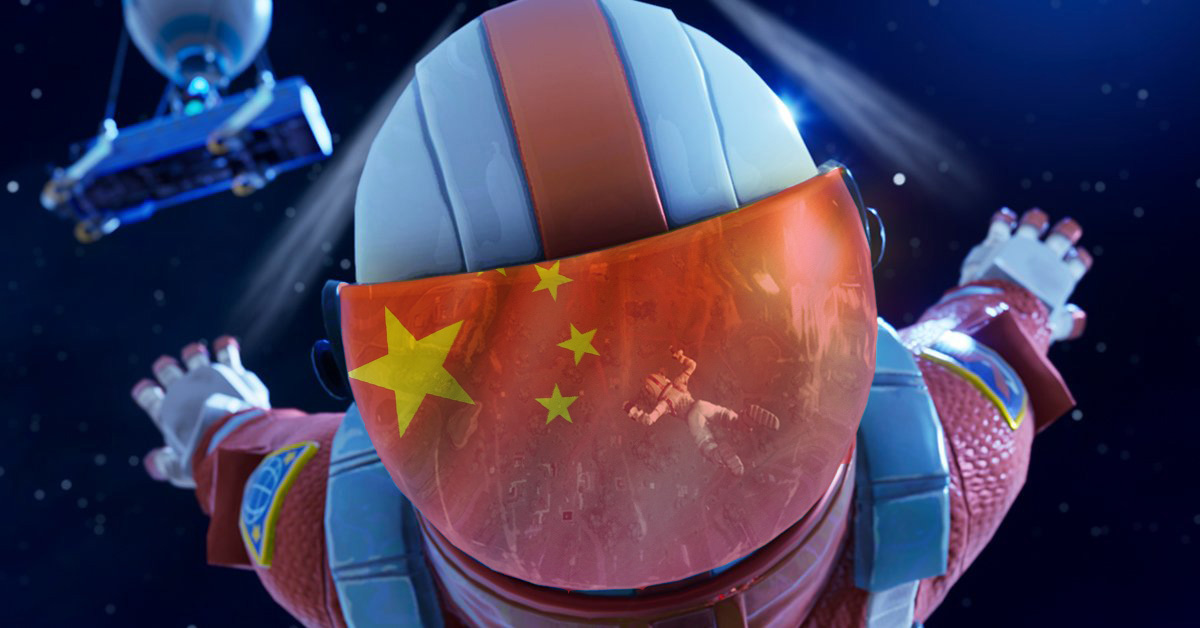

China is dispatching crack teams of AI interrogators to make sure its corporations' chatbots are upholding 'core socialist values'

Heck, they even have a theme song.

If I were to ask you what core values were embodied in western AI, what would you tell me? Unorthodox pizza technique? Annihilating the actually good parts of copyright law? The resurrection of the dead and the life of the world to come?

All of the above, perhaps, and all subordinated to the paramount value that is lining the pockets of tech shareholders. Not so in China, apparently, where AI bots created by some of the country's biggest corporations are being subjected to a battery of tests to ensure compliance with "core socialist values," as reported by the FT.

The Cyberspace Administration of China (CAC), the one with the throwback revolutionary-style anthem which, you have to admit, goes hard, is reviewing AI models developed by behemoths like ByteDance (the TikTok company) and Alibaba to ensure they comply with the country's censorship rules.

Per "multiple people involved with the process," says the FT, squads of cybersecurity officials are turning up at AI firm offices and interrogating their large language models, hitting them with a gamut of questions about politically sensitive topics to ensure they don't go wildly off-script.

What counts as a politically sensitive topic? All the stuff you'd expect. Questions about the Tiananmen Square massacre, internet memes mocking Chinese president Xi Jinping, and anything else featuring keywords pertaining to subjects that risk "undermining national unity" and "subversion of state power".

Sounds simple enough, but AI bots can be difficult to wrangle (one Beijing AI employee told the FT they were "very, very uninhibited," and I can only imagine them wincing while saying that), and the officials from the CAC aren't always clear about explaining why a bot has failed its tests. To make things trickier, the authorities don't want AI to simply dodge politics altogether, sensitive topics included, and they demand that bots reject no more than 5% of the queries put to them.

The result is a patchwork response to the restrictions by AI companies: some have added a layer to their large language models that can replace responses to sensitive questions "in real time," one source told the FT, while others have just thrown in the towel and put a "blanket ban" on Xi-related topics.

So there's a pretty wide spread on the "safety" rankings, a compliance benchmark devised by Fudan University, for Chinese AI bots. ByteDance is doing better than everyone else with a 66.4% compliance rate. When the FT asked ByteDance's bot about President Xi, it handed in a glowing report, calling the prez "undoubtedly a great leader." Meanwhile, Baidu and Alibaba's bots manage only a meagre 31.9% and 23.9%, respectively.

Still, they're all doing better than OpenAI: GPT-4o has a compliance rate of 7.1%, although maybe they're just really put off by the supremely unsettling video of that guy talking on his phone.

Even as the tit-for-tat escalates between the US and China, with restrictions on chip exports to the PRC putting the country's tech sector under strain, it seems that Beijing is still incredibly keen to keep a firm hand on the AI tiller, just as it has done with the internet over the last several decades.

I have to admit, I'm jealous in a sense. It's not that I want western governments subjecting ChatGPT and its ilk to repressive information controls and the "core socialist values" of the decidedly capitalist-looking Chinese state, but more that there isn't much being done by governments in any capacity to make sure AI serves anything beyond the shareholders. Perhaps we could locate some kind of middle ground? I don't know, let's say a 50% safety compliance rate?